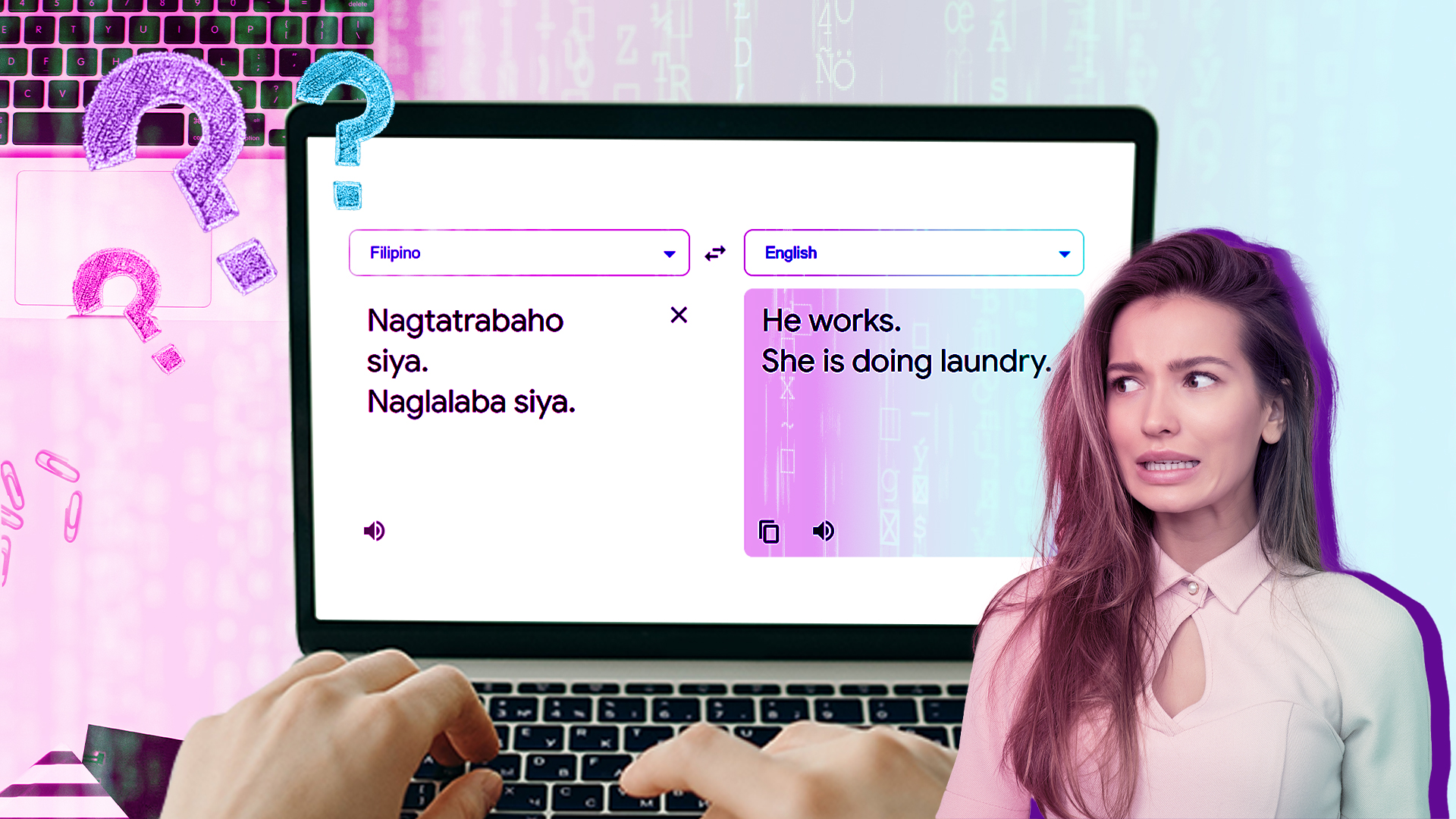

Filipino pronouns are gender-neutral, so why are people saying that Google Translate is enforcing gender roles?

Some people have pointed out a big flaw in Google Translate’s AI technology: it reproduces gender biases. The discussion began when a Finnish user shared a screenshot on Twitter. Although Finnish has no gendered pronouns, the machine-generated English translations still assigned genders to activities based on stereotypes.

Examples include “He works” and “She takes care of the children.”

In Finnish we don’t have gendered pronouns.

These translations from our gender-neutral language into English reveal a lot of bias in the world and in tech RT @annabrchisky: The world is not ready yet. Neither is technology. #InternationalWomensDay pic.twitter.com/EzuTYApkaE

— Vuokko Aro (@vuokko) March 9, 2021

With nearly 7,000 likes, the tweet gained enough attention that people around the world began testing it themselves. A Pinoy user shared her own results when translating Filipino to English. As expected, the outcome was similar, if not identical.

This sparked a discussion in her Twitter thread. Some suggested that Google Translate should use “they” as a gender-neutral pronoun instead.

We also can’t entirely blame Google Translate’s AI technology for its faults. The system learns from the data it is given, and that data is often riddled with gender biases. This points to a bigger problem in the tech industry: developers often fail to take societal issues and cultural nuances into account.

Tough to find a word that would match. “Siya” refers to a single person. “They” refers to more than one.

Maybe the closest is “That person”.

I can’t really blame Google’s translation AI, it was fed with books and documents to learn how languages work on its own.

— CitizenCass 🐈 🐾 (@CitizenCass) March 10, 2021

The issue has been discussed in the industry for some time. In 2019, the head of Google Translate, Macduff Hughes, addressed these concerns in an interview. He acknowledged that machine learning services often reflect societal biases and can even amplify them.

One initiative to combat this is offering gender-specific translations. Although the feature is available only for select languages, Google Translate can show both feminine and masculine translations for some words. The feature was first developed for French, Italian, Portuguese, Spanish, and Turkish.

Another problem Google is trying to tackle is document translation. The team noticed that if you translate a Wikipedia article about a woman from another language into English, you will most likely get male pronouns. This happens because sentences are often translated in isolation, without the context of the rest of the document.

So while Google Translate is flawed, Google is doing something about it. What matters is that the tech industry keeps working to make the world more accessible and inclusive.
