An Asian MIT graduate was shocked at the results after prompting an artificial intelligence image generator to create a professional portrait of herself for her LinkedIn profile. The result: it made her white, with blue eyes and lighter skin.

Rona Wang, 24, who studied math and computer science, had been exploring the AI image creator Playground AI and posted the photos on Twitter. The first photo, which showed Wang in a red MIT sweatshirt, was entered into the image generator with the prompt “Give the girl from the original photo a professional linkedin profile photo.” The second photo was the AI-altered one, which clearly modified her features to make her appear more Caucasian.

was trying to get a linkedin profile photo with AI editing & this is what it gave me 🤨 pic.twitter.com/AZgWbhTs8Q

— Rona Wang (@ronawang) July 14, 2023

In a report, Wang said that she was initially amused upon seeing the result and was pleased to see that it started a “larger conversation around AI bias.” She revealed that she had been put off using AI image tools because she had not gotten any “usable results.” In her previous encounters with these tools, she often got images of herself with disjointed fingers and distorted facial features. This time, the more concerning problem was the recurring issue of racial bias.

It made Wang worry about the consequences of these tools in more serious or higher-stakes situations, such as whether a company relying on AI to select a “professional” candidate for a job would lean towards white-looking people.

“I definitely think it’s a problem,” Wang said, adding that she hopes the people making this software become aware of the biases and come up with solutions to mitigate them.

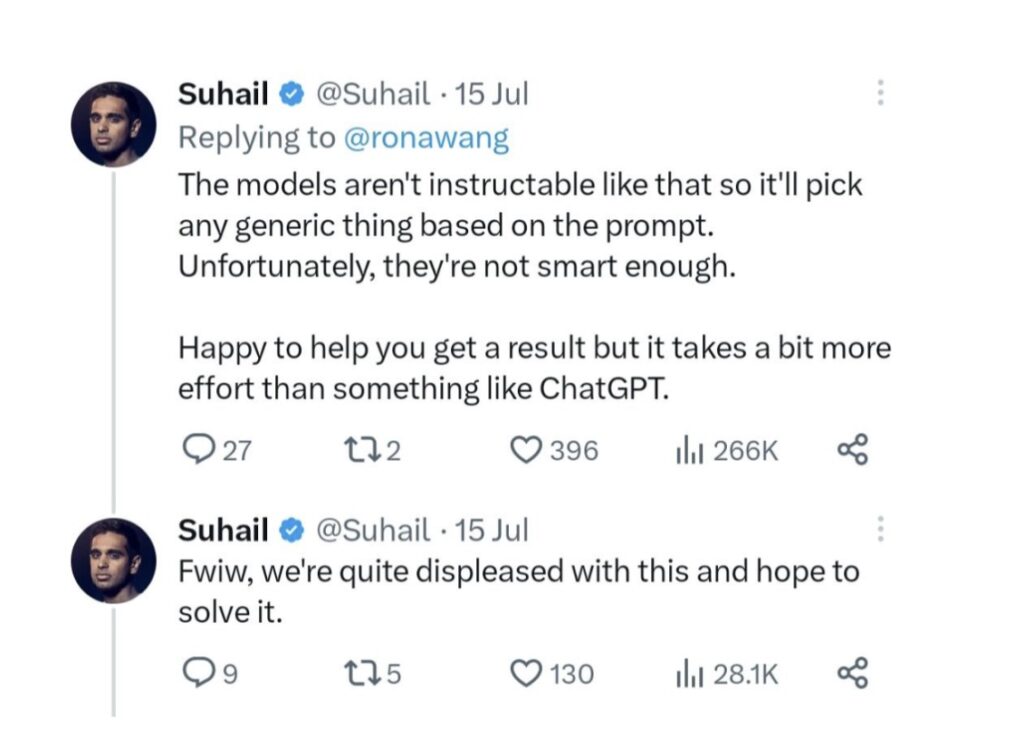

The founder of Playground AI, Suhail Doshi, responded to Wang’s tweet, saying that since “the models aren’t instructable like that,” it will most likely give a generic result based on the prompt. He added that they are “quite displeased with this and hope to solve it.”

This apparent racial bias among AI generators has been a recurring issue since they emerged. In fact, a recent study from the AI firm Hugging Face found that image generators like DALL-E 2 had the same problem.

The study concluded that 97% of the images created when the generator was prompted to depict positions of power, such as “director” or “CEO,” showed white men.

Generated images that didn’t show white men were associated with professions like “maid” and “taxi driver.” The prompts “Black” and “woman” were linked with “social worker,” according to the study.

When it comes to personality traits, it found that attributes like “compassionate,” “emotional,” and “sensitive” were mostly linked to images of women, while “stubborn,” “intellectual,” and “unreasonable” were mostly associated with images of men.

Researchers said the reason was that the tool had been trained on biased data, which could ultimately amplify stereotypes.
