The gaming community can be a toxic place, especially in public voice channels where players can say anything they want with no moderation, leaving room for hate speech. Intel hopes to address this problem with its new AI software, Bleep.

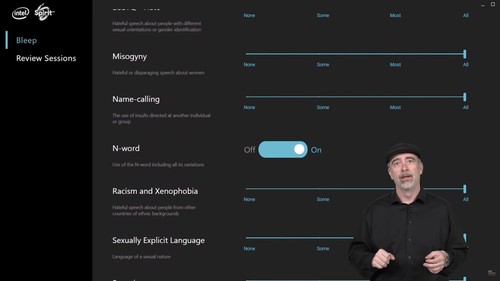

In a showcase, the company presented its latest work on a program that censors offensive words in voice chat in real time. Intel demonstrated that Bleep works on a slider scale, letting users control how much "Misogyny" and "Racism and Xenophobia" they want to hear.

“While we recognize that solutions like Bleep don’t erase the problem, we believe it’s a step in the right direction—giving gamers a tool to control their experience,” said Roger Chandler, VP and General Manager of Intel Client Product Solutions.

People were quick to question the premise behind Bleep's mechanics. If Intel wanted to address concerns about hate speech, why let users choose to hear any of it at all?

Marcus Kennedy, General Manager of Intel's Gaming Division, explained that the software puts "nuanced control in the hands of the users." Language that seems offensive might just be playful banter between friends.

Regardless, it's still a pretty bleak way of handling issues like racism and misogyny, since it leaves other players responsible for muting toxicity rather than holding its source accountable.

Gamers on Twitter shared their opinions on the software and pointed out the absurdity of Bleep's sliders.

For when you’re feeling 30% xenophobic but 70% misogynistic

— lilith (@bampersandand) April 8, 2021

https://twitter.com/AlphaLackey/status/1380160251232722952?s=20

I'm imagining one poor guy assigned to come up with all the racist slurs he can think of and rank them by badness. I'm not sure if in this scenario it's worse if he's white or not

— fe (@mossalto) April 8, 2021

Bleep is still in beta, and Intel will most likely make changes to the software in response to the criticism.

"We're gonna learn from all of those sources and the goal is really to give users control and choice and see what works, and adapt accordingly," said Kennedy.
