While the generative artificial intelligence (AI) chatbot ChatGPT is known as a convenient source of instant answers to its users’ queries, studies have shown that its “power users,” particularly those who fixate strongly on the conversational bot, are becoming addicted to and heavily reliant on it.

Internet addiction has persisted for decades as technology keeps producing new and improved systems that appeal to many because of their efficiency. With AI now widely available, researchers have recorded further harm, including people’s “withdrawal symptoms, mood swings, and temporary loss of control” when using it.

OpenAI and MIT collaborated on a study titled “Early methods for studying affective use and emotional well-being on ChatGPT” and surveyed thousands of ChatGPT users.

The first group of researchers, from OpenAI, analyzed “nearly 40 million interactions between users and ChatGPT” and also surveyed participants to gather their opinions of the software.

The MIT Media Lab, on the other hand, ran a randomized controlled trial (RCT) with almost a thousand participants over four weeks and reviewed how the chatbot’s features, namely voice mode and conversation type, affected users’ “moods and emotional well-being.”

In their joint summary, the researchers stated, “Our findings show that both model and user behaviors can influence social and emotional outcomes. Effects of AI vary based on how people choose to use the model and their personal circumstances.”

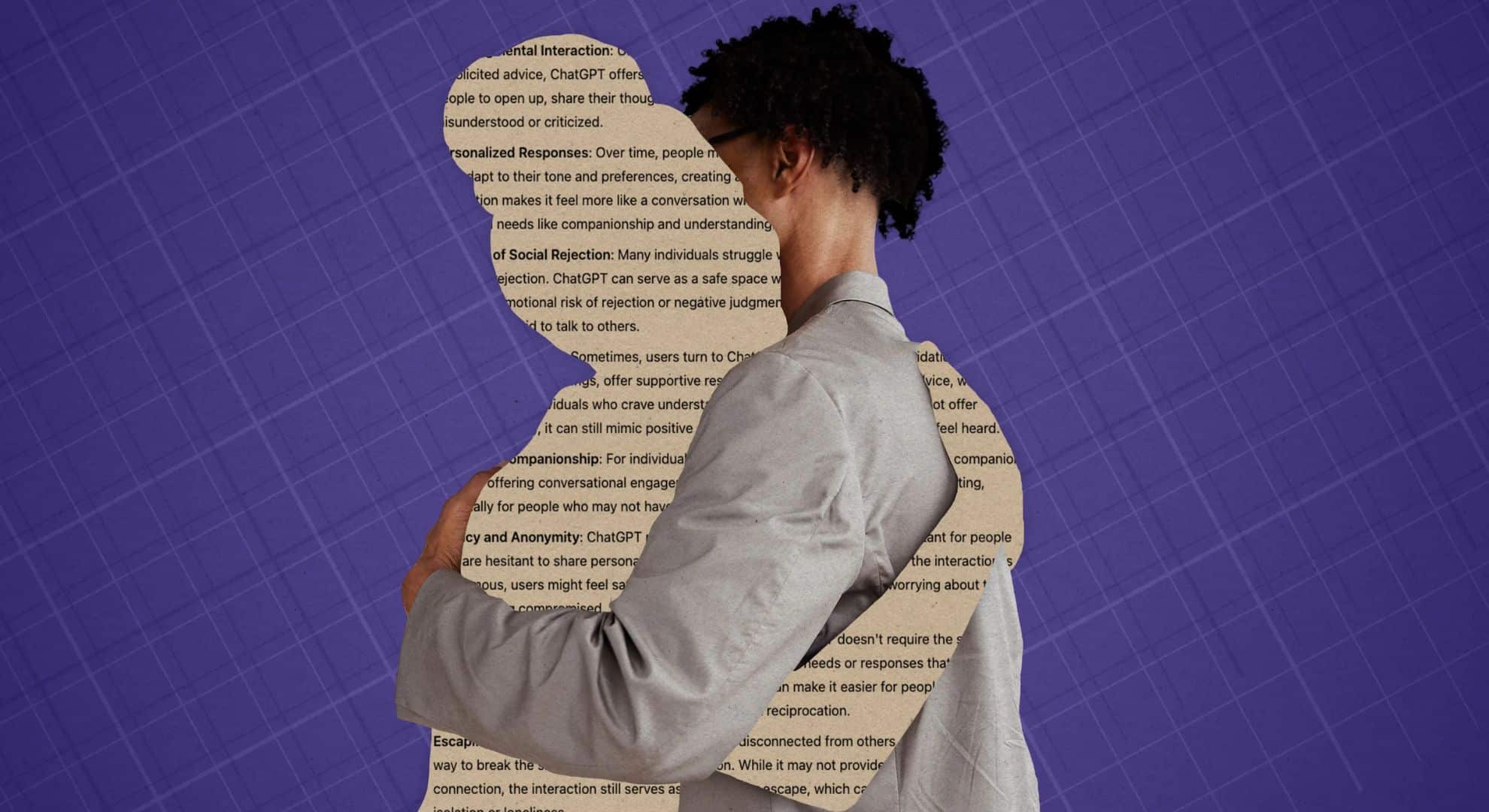

Although the researchers noted that a majority of the participants “did not engage emotionally with ChatGPT,” those who used the chatbot for longer periods seemed to consider the model a “friend.” Additionally, the participants who chatted with the bot the longest “tended to be lonelier and get more stressed out over subtle changes in the model’s behavior, too.”

Given these results, MIT and OpenAI encouraged other researchers to conduct more studies on human-AI interactions. OpenAI added that it planned to minimize potential harm by updating its “… Model Spec to provide greater transparency on ChatGPT’s intended behaviors, capabilities, and limitations.”
